FYP-DevLog-012

Introduction

Hey, guys! Welcome back to another dev-log entry for my final year project (FYP) after months of hiatus during the semester break. Even though I say that it's a hiatus, a lot has changed since my last update — and I mean every sense of that word.

In case you are unaware, my partner and I took the opportunity to progress through our FYP as much as we can during the semester break in order to have a head start for Final Year Project 2 (FYP2) in our final semester.

Fast forward 3 months later, and I'm already done with the first week of my final semester. In my last post, I promised that I will continue this series once the new semester commences.

I'm sure you guys are eager to know our current progress compared to where we left off. Without further ado, here are some of the progress highlights throughout the semester break!

Progress Highlights

Project Research / Discussion

General

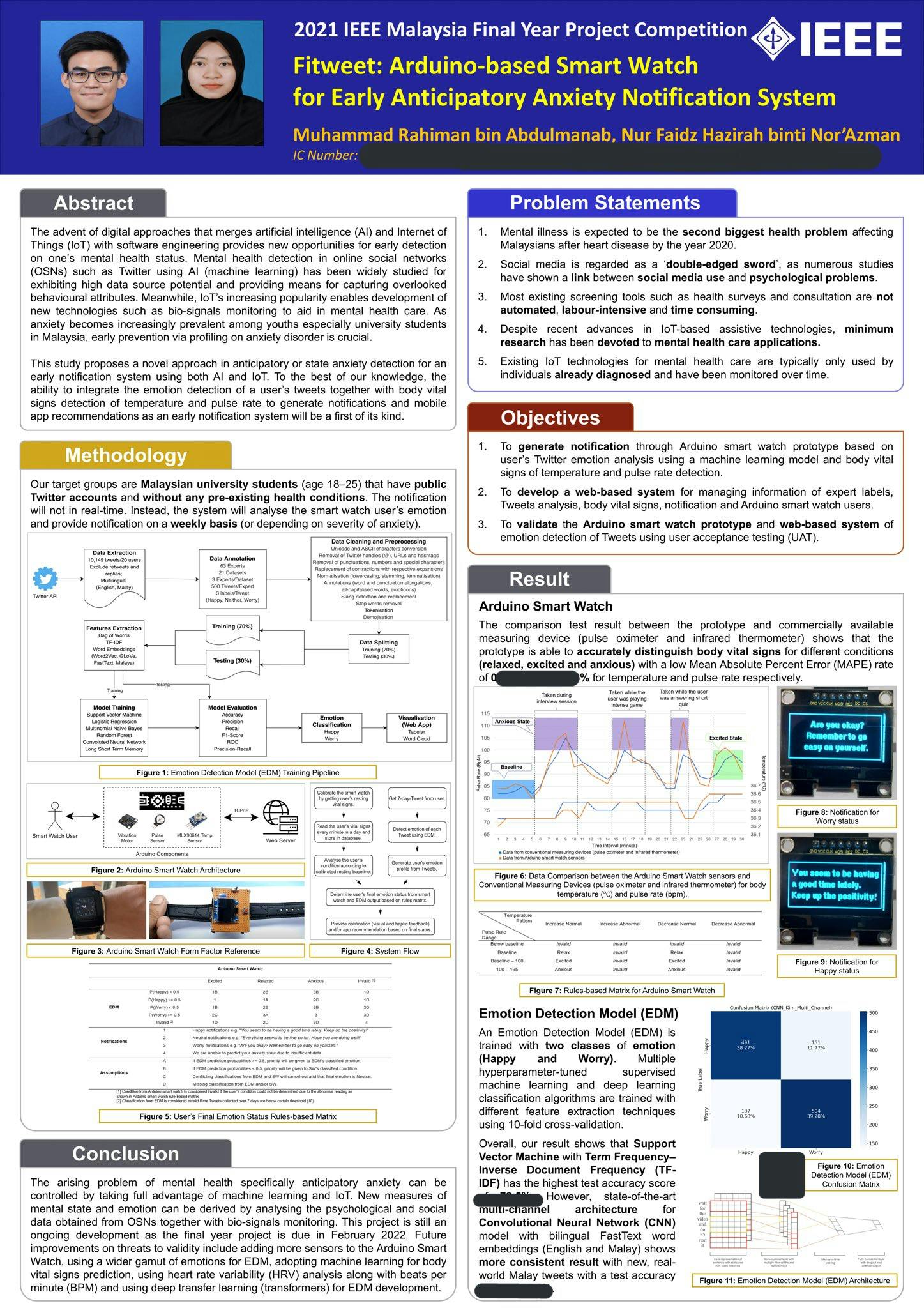

Participated in the IEEE 2021 Malaysia Final Year Project (FYP) Competition:

- It is open for all undergraduate students (IEEE Member and Non-Member) who have just completed or is currently doing their FYP in 2021.

- It is open for all undergraduate students (IEEE Member and Non-Member) who have just completed or is currently doing their FYP in 2021.

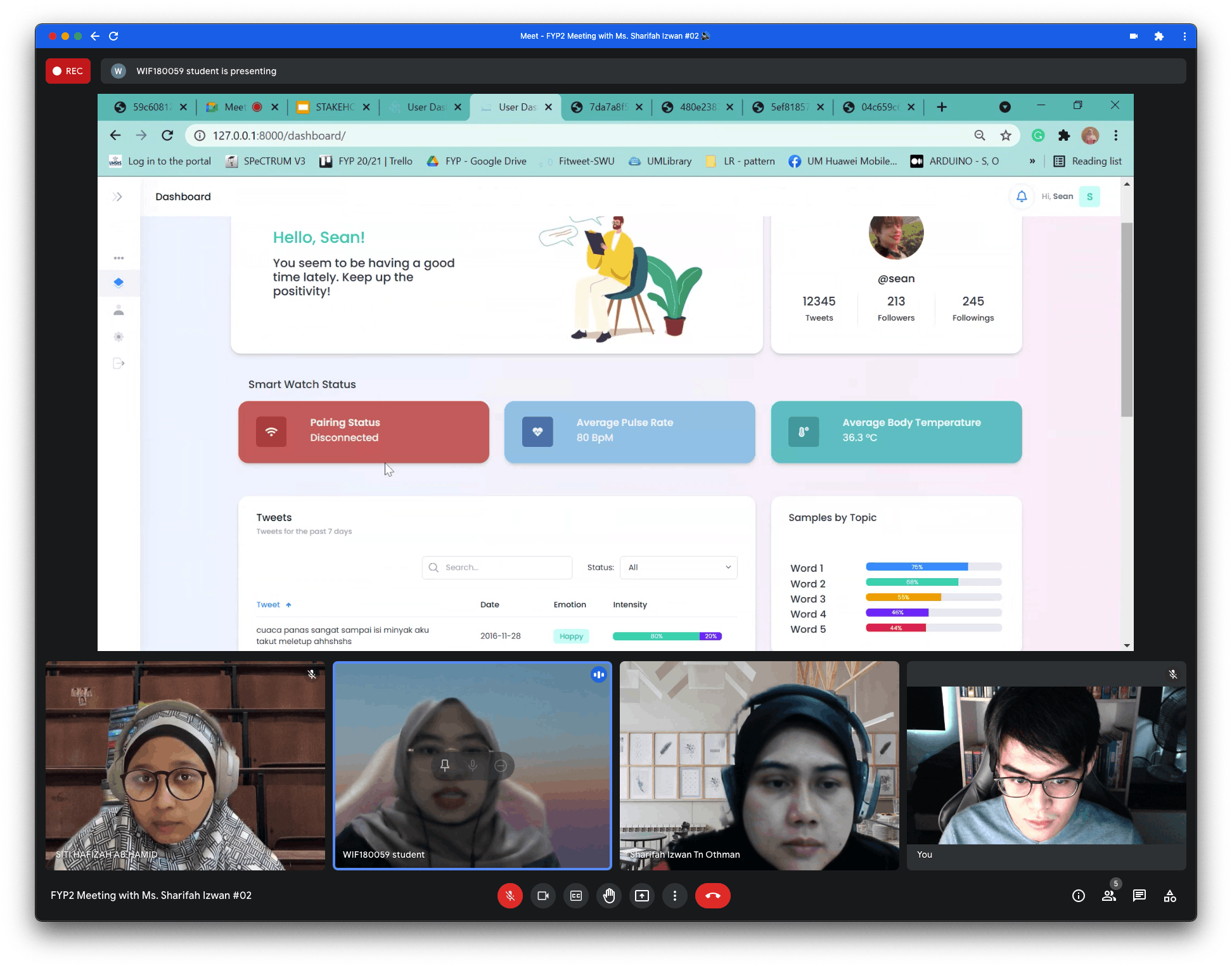

Organised first FYP2 meeting with stakeholder, Ms. Sharifah Izwan.

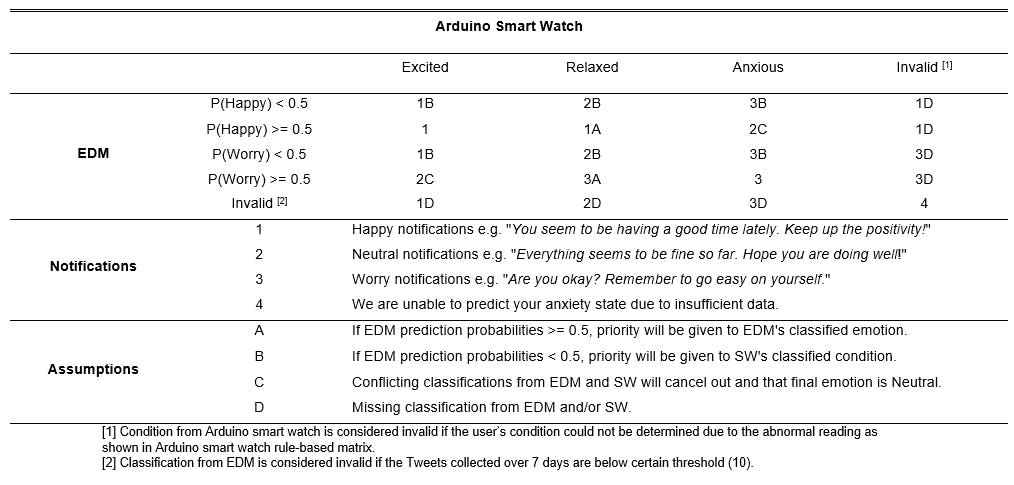

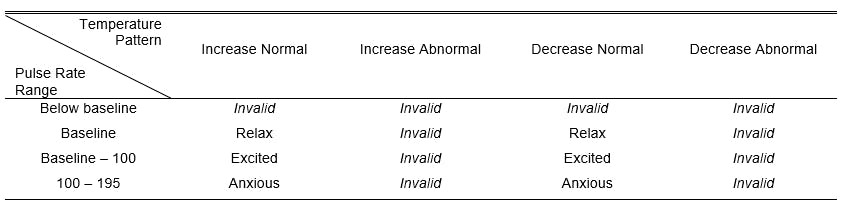

Drafted the final rules matrix for combining the output of EDM and Arduino Smart Watch

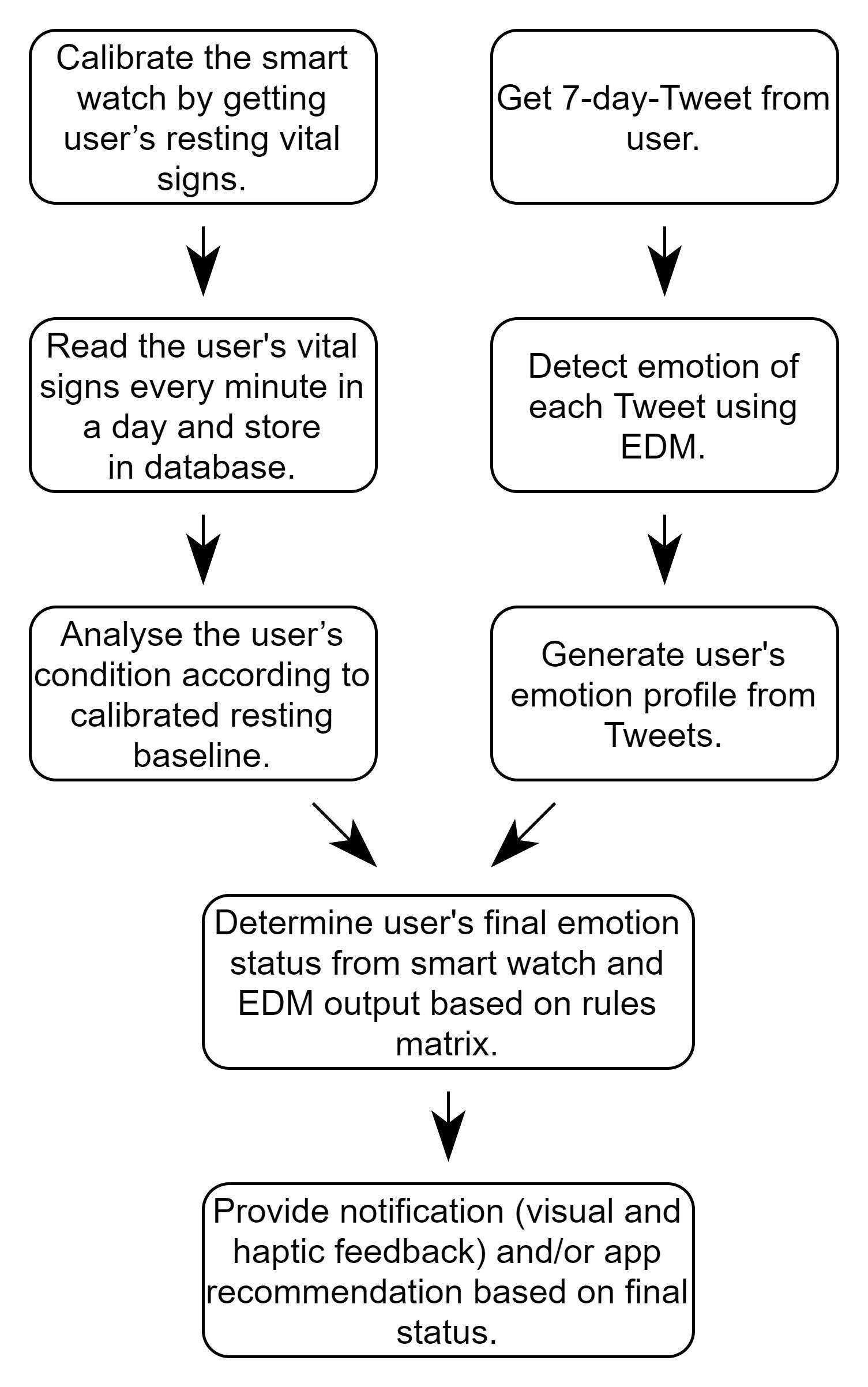

Drafted Fitweet's full system flow diagram:

Arduino Smart Watch

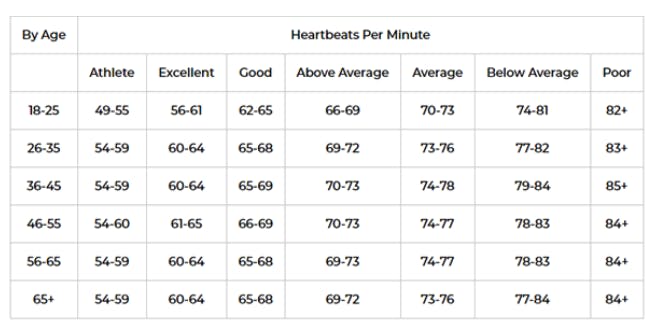

Read multiple literature reviews on determining baseline of body vital signs (temperature and pulse-rate) based on age and for the purpose of detecting anxiety:

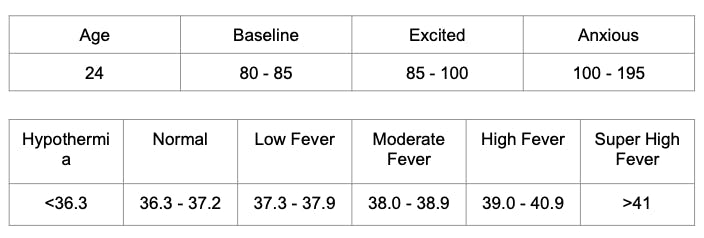

Updated the system architecture of the Arduino Smart Watch with new and more accurate sensors:

Emotion Detection Model (EDM)

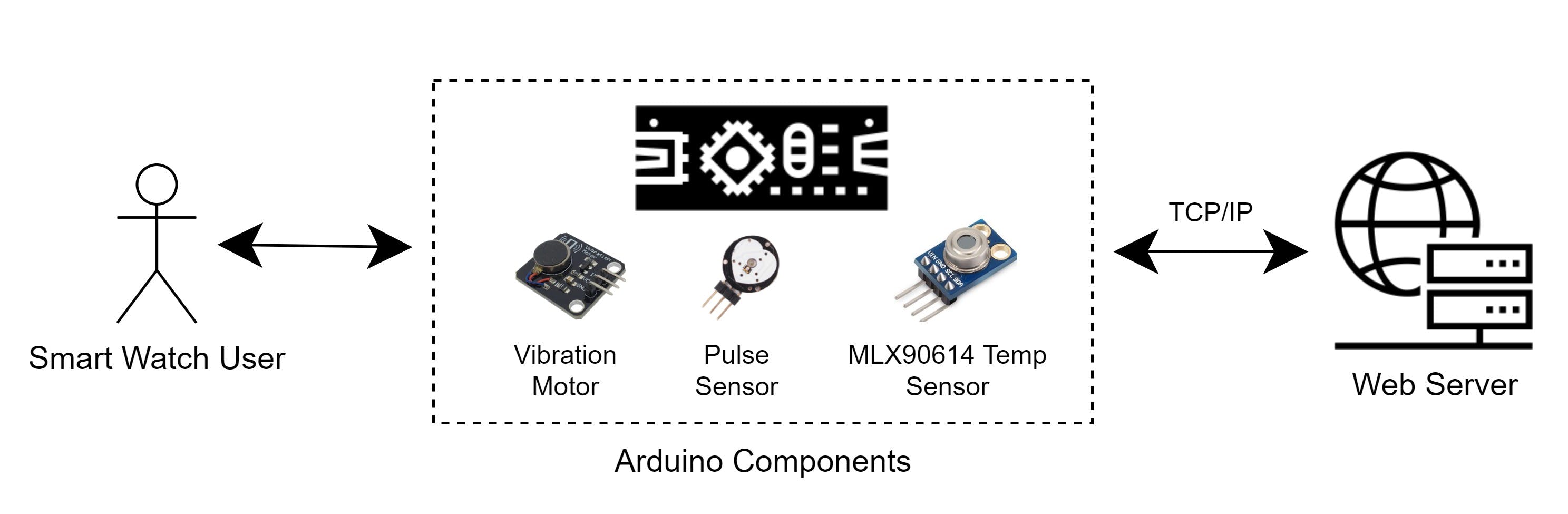

Read several literature reviews on text data cleaning and preprocessing methods:

- Referred mostly on research by Symeonidis, S., Effrosynidis, D., & Arampatzis, A. (2018) and Baziotis et al. (2017).

- Symeonidis et al. (2018) provides an experimental comparison of sixteen preprocessing techniques for Sentiment Analysis, as shown in the figure below:

- The paper outlines some recommended techniques to use such as replacing URLs and user mentions, removing numbers, replacing punctuation repetitions and contractions as well as lemmatization

- GitHub repository of the research: Deffro/text-preprocessing-techniques

- Baziotis et al. (2017). open-sourced their data preprocessing pipeline called ekphrasis (ekphrasis) which was used their team's submission of the annual International Workshop on Semantic Evaluation (SemEval) 2017 Task 4 (English), Sentiment Analysis in Twitter.

Drafted a table of comparison between my own text preprocessing pipeline with state-of-the-art ones such as

ekphrasisandmalaya:

| Features | Fitweet | Ekphrasis | Malaya |

| Demojisation support | Yes | No | No |

| Emoticons annotation | Yes | Yes | No |

| Bad unicode fix | Yes | Yes | Yes |

| ASCII characters conversion | Yes | No | No |

| Elongated words annotation | No | Yes | Yes |

| Elongated punctuations annotation | Yes | Yes | Yes |

| Specific punctuations annotation | Yes (for !, ? and .) | No (generalised) | No (generalised) |

| Word segmentation | No | Yes | Yes |

| Hashtags annotation and segmentation | No | Yes | Yes |

Open-close tag for precise annotation e.g. <allcaps></allcaps> | No | Yes | Yes |

| Spelling correction | No | Yes | Yes |

| Slang detection | Yes (using custom corpus) | Yes (using SocialTokenizer()) | No |

| Stemming/Lemmatization | Yes | No | Yes |

| English contractions expansion | Yes | Yes* | Yes |

| Stop-words removal | Yes | Yes | No |

| Translation support | No | No | Yes (EN-MS) |

| Information-bearing expressions identification e.g. currencies, datetime formats, phone numbers, urls | No | Yes (limited to English context)** | Yes |

| Overall performance | Slow | Fast* | Fast |

Outlined several conclusions from research findings and testing:

- Despite

fitweet_ekphrasishaving the best balance between performance and number of preprocessing techniques applied, as of the time of this writing, I have decided to go for my ownfitweetpipeline since it manages to yield higher accuracy overall. - This not-so-scientific test may have proven Symeonidis et al. (2018)`s point that not all text preprocessing techniques may yield positive results. Adopting an exhaustive approach to use as many techniques as possible may not be the ideal case.

- In terms of FYP, I think the panels would appreciate more if I were to use my own text preprocessing pipeline instead of merely adopting others.

- Despite

Updated the EDM training flow diagram:

Combined Fitweet's labelled dataset with new Neutral data from data labelled as Bored and additional Happy data from another researcher (Chempaka Seri; a master student of my supervisor) dubbed as Fitweet-Seri:

- 0 = Happy, 1 = Neutral, 2 = Worry

- Dataset has also been balanced

Discussed with supervisor and researcher-slash-mentor Chempaka Seri regarding the issue of using Bored-labelled from Chempaka Seri as Neutral data and how it affects model performance:

- The following classification report of a Logistic Regression model shows that Neutral label has the lowest precision

- This indicates that despite the intention of adding a Neutral dataset and label to cover factual tweets, the dataset used only lowers down the overall model accuracy

- Decided to try using Seri's Relaxed dataset as Neutral instead of her Bored data as well as use her previous model for her research to label my Neither dataset into multiple emotion labels based on Russel's Model of Affect

- The following classification report of a Logistic Regression model shows that Neutral label has the lowest precision

Researched several pre-trained word embedding models for feature extraction:

- Examples include Google's Word2Vec, GLoVe, Malaya's Word2Vec and FastText

- Using pre-trained word embeddings is better as they can leverage massive datasets that you may not have access to, built using billions of different words, with a vast corpus of language that captures word meanings in a statistically robust manner.

Project Development

Arduino Smart Watch

Ran six trials to calculate the baseline for temperature and pulse rate of university students

- Retrieve vital signs during interview, playing game with limited time and guessing songs with limited time sessions.

- Used both Arduino's sensors as well as conventional measuring devices (pulse oximeter and infrared thermometer)

Compiled research findings and baseline testing into a chart

- Proved that the Arduino's sensors are almost on par and reliable with the conventional measuring devices (pulse oximeter and infrared thermometer)

- Proved that correlation exists when it comes to distinguishing a person's anxious state based on their body vital signs especially pulse rate

Drafted the rules matrix for anticipatory anxiety detection using Arduino Smart Watch:

Developed a draft of the notification's UI on the Arduino Smart Watch's OLED screen:

Emotion Detection Model (EDM)

Developed custom text data cleaning and preprocessing pipeline for Fitweet EDM which combines some techniques used in literature reviews

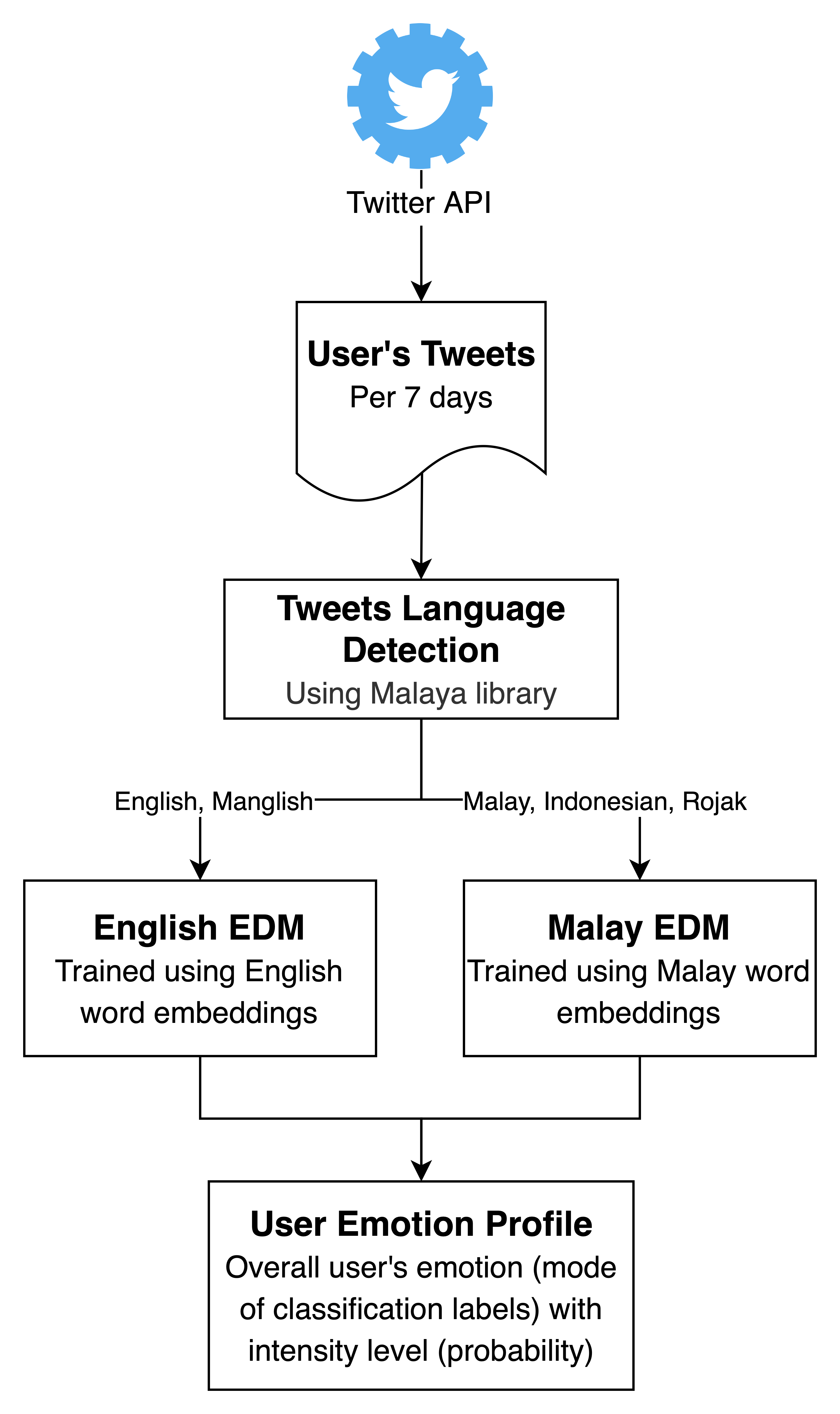

Drafted two different architectures for EDM in order to handle multilingual tweets:

- Using bilingual pipeline i.e. two different machine learning models trained using different language word embeddings. Tweets will be passed to the corresponding model based on its detected language):

- Using a single model trained with both English and Malay word embeddings. Involves complex deep learning (e.g. bi-directional, LSTM, attention) and although it is objectively simpler, initial testing shows that the prediction time is rather slow.

- Using bilingual pipeline i.e. two different machine learning models trained using different language word embeddings. Tweets will be passed to the corresponding model based on its detected language):

Developed code for creating the embedding layer from pre-trained word embedding models using

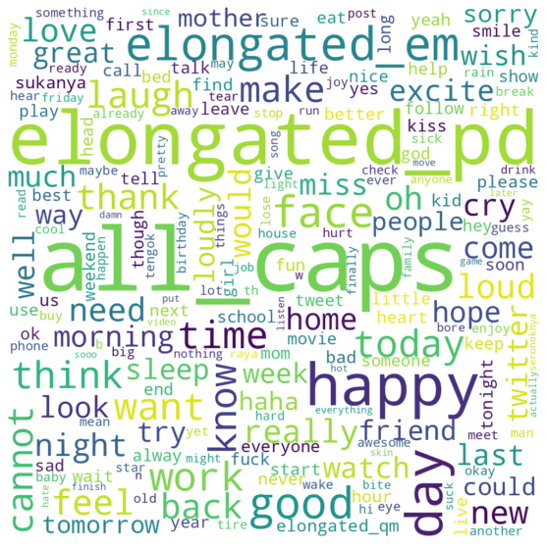

tensorflowandkerasfor deep learning model trainingsDeveloped code for producing the word cloud of dataset using

wordcloudpackage- Fitweet-Seri's dataset word cloud using Fitweet's data preprocessing pipeline

- Fitweet-Seri's dataset word cloud using Fitweet's data preprocessing pipeline

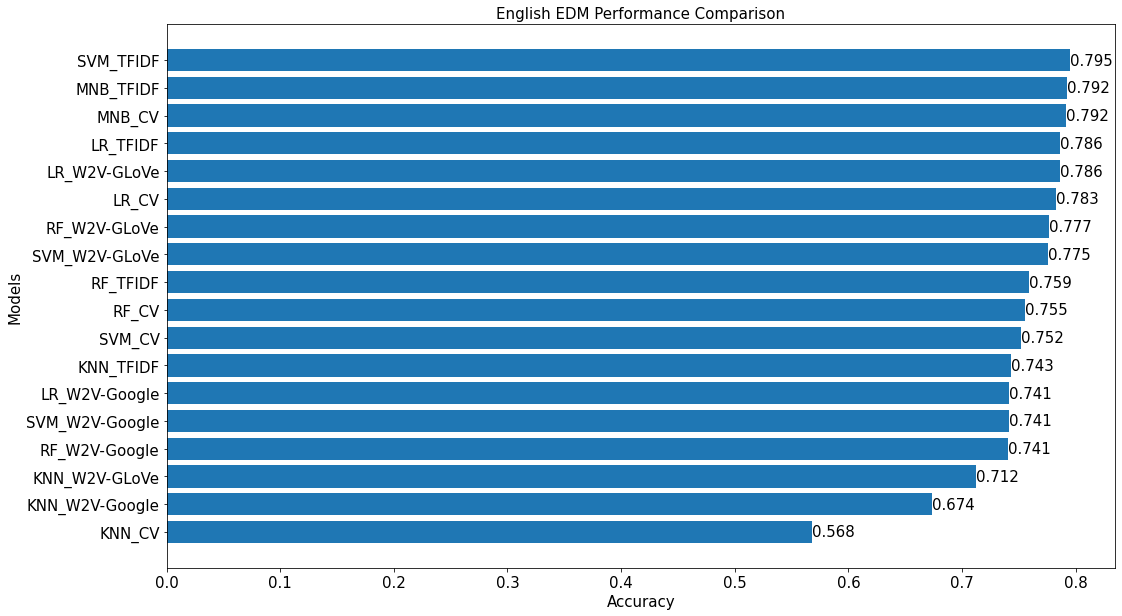

Hyperparameter-tuned multiple conventional/traditional machine learning models such as SVM, Logistic Regression, Random Forest, Multinomial Naïve Bayes etc. prior to training.

Developed and tested machine learning models using common conventional/traditional algorithms such as SVM, Logistic Regression, Random Forest, Multinomial Naïve Bayes etc. using various feature extraction methods such as TF-IDF and word embeddings:

- Model accuracy performance comparison:

- Note that the title of the bar chart above is a bit misleading as some of the models are not trained using English word embeddings such as Word2Vec and GLoVe and instead using Bag-of-Words (BOW) approach that ranks terms from the dataset itself (which contains a hodgepodge of different languages and slangs

- Model accuracy performance comparison:

Developed and tested machine learning models using deep learning architectures such as Convoluted Neutral Network (CNN) and Long Short Term Memory (LSTM)

- Deep learning models tend to have slightly lower accuracies compared to conventional ones, but this is mostly due to lack of data

- However, these deep learning models can generalise better and can label unseen real-world tweets more consistently and accurately during initial validation testing

- Proper validation testing should be done in the future